2. User Guide¶

2.1. Introduction to High-Level Synthesis¶

High-level synthesis (HLS) refers to the synthesis of a hardware circuit from a software program specified in a high-level language, where the hardware circuit performs the same functionality as the software program. For LegUp, the input is a C/C++-language program, and the output is a circuit specification in the Verilog hardware description language. The LegUp-generated Verilog can be given to Libero to be programmed on a Microchip FPGA. The underlying motivation for HLS is to raise the level of abstraction for hardware design, by allowing software methodologies to be used to design hardware. This can help to shorten design cycles, improve design productivity and reduce time-to-market.

While a detailed knowledge of HLS is not required to use LegUp, it is worthwhile to highlight the key steps involved in converting software to hardware. The four main steps involved in HLS are allocation, scheduling, binding, and RTL generation, which runs one after another (i.e., binding runs after scheduling is done).

- Allocation: The allocation step defines the constraints on the generated hardware, including the number of hardware resources of a given type that may be used (e.g. how many divider units may be used, the number of RAM ports, etc.), as well as the target clock period for the hardware, and other user-supplied constraints.

- Scheduling: Software programs are written without any notion of a clock or finite state machine (FSM). The scheduling step of HLS bridges this gap, by assigning the computations in the software to occur in specific clock cycles in hardware. With the user-provided target clock period constraint (e.g. 10 ns), scheduling will assign operations into clock cycles such that the operations in each cycle does not exceed the target clock period, in order to meet the user constraint. In addition, the scheduling step will ensure that the data-dependencies between the operations are met.

- Binding: While a software program may contain an arbitrary number of operations of a given type (e.g. multiplications), the hardware may contain only a limited number of units capable of performing such a computation. The binding step of HLS is to associate (bind) each computation in the software with a specific unit in the hardware.

- RTL generation: Using the analysis from the previous steps, the final step of HLS is to generate a description of the circuit in a hardware description language (Verilog).

Executing computations in hardware brings speed and energy advantages over performing the same computations in software running on a processor. The underlying reason for this is that the hardware is dedicated to the computational work being performed, whereas a processor is generic and has the inherent overheads of fetching/decoding instructions, loading/storing from/to memory, etc. Further acceleration is possible by exploiting hardware parallelism, where computations can concurrently. With LegUp, one can exploit four styles of hardware parallelism, which are instruction-level, loop-level, thread-level, and function-level parallelism.

2.1.1. Instruction-level Parallelism¶

Instruction-level parallelism refers to the ability to concurrently execute computations for instructions concurrently by analyzing data dependencies. Computations that do not depend on each other can be executed at the same time. Consider the following code snippet which performs three addition operations.

z = a + b;

x = c + d;

q = z + x;

...

Observe that the first and second additions do not depend on one another. These additions can therefore be executed concurrently, as long as there are two adder units available in the hardware. LegUp automatically analyzes the dependencies between computations in the software to exploit instruction-level parallelism in the generated hardware. The user does not need to do anything. In the above example, the third addition operation depends on the results of the first two, and hence, its execution cannot be done in parallel with the others. Instruction-level parallelism is referred to as fine-grained parallelism, as concurrency is achieved at a fine-grained level (instruction-level) of granularity.

2.1.2. Loop-level Parallelism¶

In software, the majority of runtime can be spent on loops, where loop iterations execute sequentially. That is, loop iteration i needs to finish before iteration i + 1 can start. With LegUp, it is possible to overlap the execution of a loop iteration with another iterations using a technique called loop pipelining (see Loop Pipelining). Now, imagine a loop with N iterations, where each iteration takes 100 clock cycles to complete. In software, this loop would take 100N clock cycles to execute. With loop pipelining in hardware, the idea is to execute a portion of a loop iteration i and then commence executing iteration i + 1 even before iteration i is complete. If loop pipelining can commence a new loop iteration every clock cycles, then the total number of clock cycles required to execute the entire loop be N + (N-1) cycles – a significant reduction relative to 100N. The (N-1) cycles is because each successive loop iteration start 1 cycle after the previous iteration, hence the last loop starts after (N-1) cycles.

A user can specify a loop to be pipelined with the use of the loop pipeline pragma. By default, a loop is not pipelined automatically.

2.1.3. Thread-level Parallelism¶

Modern CPUs have multiple cores that can be used to concurrently execute multiple threads in software. POSIX threads (Pthreads), is one the widely used multi-threading techniques in C/C++, where, parallelism is realized at the granularity of entire C/C++ functions. Hence thread-level parallelism is referred to as coarse-grained parallelism since one or more functions execute in parallel. LegUp supports hardware synthesis of Pthreads, where concurrently executing Pthreads in software are synthesized into concurrently executing hardware units (see Multi-threading with Pthreads). This allows a software developer to take advantage of spatial parallelism in hardware using a familiar parallel programming paradigm in software. Moreover, the parallel execution behaviour of Pthreads can be debugged in software, it is considerably easier than debugging in hardware.

In a multi-threaded software program, synchronization between the threads can be important, with the most commonly used synchronization constructs being mutexes and barriers. LegUp supports the synthesis of mutexes and barriers into hardware.

2.1.4. Data Flow (Streaming) Parallelism¶

The second style of coarse-grained parallelism is referred to as data flow parallelism. This form of parallelism arises frequently in streaming applications, and are commonly used for video/audio processing, machine learning, and computational finance. In such applications, there is a stream of input data that is fed into the application at regular intervals. For example, in an audio processing application, a digital audio sample may be given to the circuit every clock cycle. In streaming applications, a succession of computational tasks is executed on the stream of input data, producing a stream of output data. For example, the first task may be to filter the input audio to remove high-frequency components. Subsequently, a second task may receive the filtered audio, and boost the bass low-frequency components. Observe that, in such a scenario, the two tasks may be overlapped with one another. Input samples are continuously received by the first task and given to the second task.

LegUp provides a way for a developer to specify data flow parallelism through the use of function pipelining (see Function Pipelining) and/or Pthreads (see Data Flow Parallelism with Pthreads) with LegUp’s FIFO library (see Streaming Library) used to connect the streaming modules.

2.2. LegUp Overview¶

LegUp accepts a C/C++ software program as input and automatically generates hardware described in Verilog HDL (hardware description language) that can be programmed onto a Microchip FPGA. The generated hardware can be imported as an HDL+ component into SmartDesign with a Tcl script that is also generated by LegUp.

In a software program, user first needs to specify a top-level function (during project creation in the LegUp IDE or in the source code with our pragma, #pragma LEGUP function top <function_name> ). Please refer to the Specifying the Top-level Function section for more details specifying the top-level function.

Then the following button, Compile Software to Hardware can be clicked to compile software to hardware:

This will compile the top-level function and all of its descendant functions into hardware. The rest of the program (outside the top-level function) is considered as the software testbench, to give inputs into the top-level function and verify outputs from the top-level function (and its descendants). The software testbench is used to automatically generate the RTL testbench and stimulus for SW/HW Co-Simulation.

2.3. LegUp Pragmas¶

Pragmas can be applied to the software code by the user to apply HLS optimization techniques and/or guide the compiler for hardware generation. They are applied directly on the applicable software construct (i.e., function, loop, argument, array) to specify a certain optimization for them. For example, to apply pipelining on a loop:

#pragma LEGUP loop pipeline

for (i = 1; i < N; i++) {

a[i] = a[i-1] + 2

}

For more details on the supported pragmas, please refer to LegUp Pragmas Manual. For more details on loop pipelining, please refer to Loop Pipelining.

2.4. LegUp Constraints¶

LegUp also supports user constraints to guide hardware generation.

Whereas pragmas are applied directly on the source code for optimizations that are specific and local to the software construct that it is being applied on (function, loop, memory, argument, etc),

constraints are used for settings that will be globally applied to the entire program (i.e., setting the target FPGA, target clock period).

Each project specifies its constraints in the config.tcl file in the project directory.

This file is automatically generated by the LegUp IDE. To modify the constraints, click the HLS Constraints button:

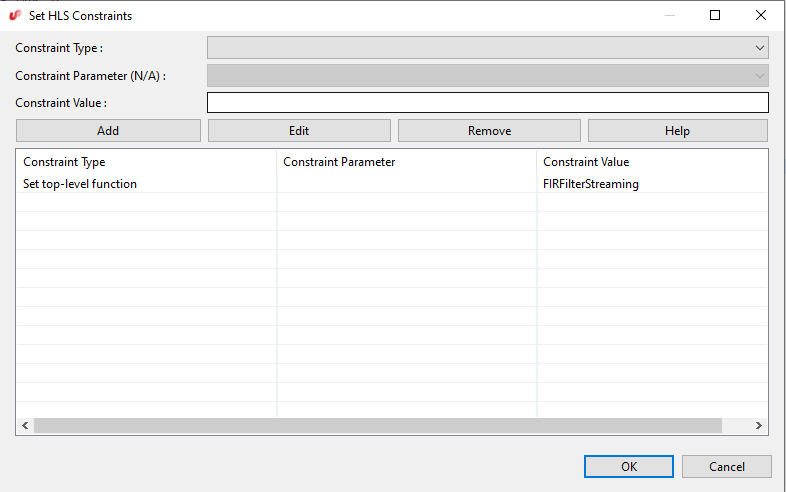

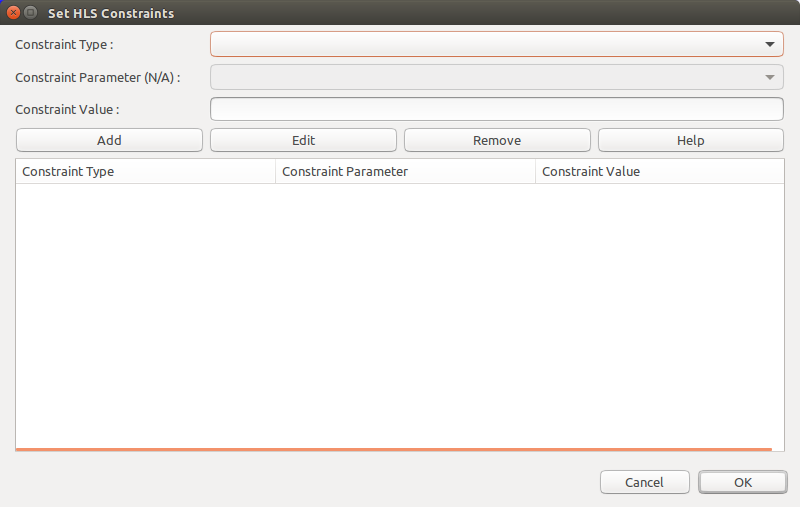

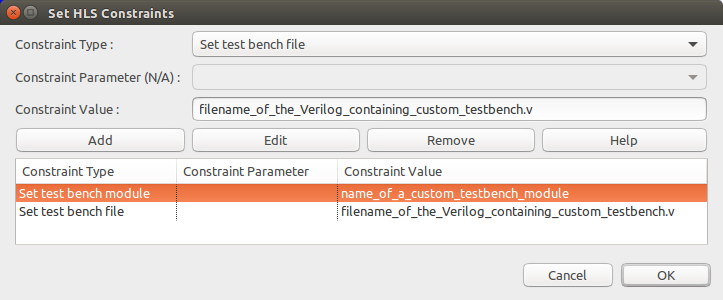

The following window will open:

You can add, edit, or remove constraints from this window. Select a constraint type from the first drop-down menu. If you want more information about a constraint, click the Help button, which will open the corresponding Constraints Manual page.

An important constraint is the target clock period (shown as Set target clock period in the drop-down menu).

With this constraint, LegUp schedules the operations of a program to meet the specified clock period.

When this constraint is not given, LegUp uses the default clock period for each device, as shown below.

| FPGA Vendor | Device | Default Clock Frequency (MHz) | Default Clock Period (ns) |

|---|---|---|---|

| Microsemi | PolarFire | 100 | 10 |

| Microsemi | SmartFusion2 | 100 | 10 |

Details of all LegUp constraints are given in the Constraints Manual.

2.5. Specifying the Top-level Function¶

When compiling software to hardware with LegUp, you must specify the top-level function for your program.

Then LegUp will compile the specified top-level function and all of its descendant functions to hardware.

The remainder of the program (i.e., parent functions of the top-level function, typically the main function)

becomes a software testbench that is used for SW/HW Co-Simulation.

If there are multiple functions to be compiled to hardware, you should create a wrapper function that calls all of the

desired functions.

There are two ways to specify the top-level function.

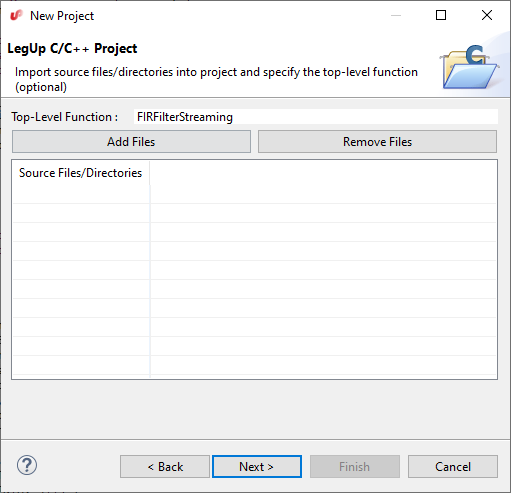

The first way is to specify it during project creation in the LegUp IDE, as shown below.

This will save the top-level function constraint into the config.tcl. After creating the project, if you open up the HLS Constraints window, the top-level function should show there.

You can edit or remove the function from this window.

Alternatively, the top-level function can also be specified with the pragma, #pragma LEGUP function top, directly on the source code, below the function prototype, as shown below:

void top(int a, int b) {

#pragma LEGUP function top

...

...

}

Note

Please note that you cannot specify the top-level function using both the pragma and in project creation/HLS Constraints window. If you have specified the top-level function during project creation, you should not specify it again with the pragma. If you want to use the pragma, you should leave the Top-Level Function box empty during project creation or remove the specified top-level function in the HLS Constraints window.

2.6. SW/HW Co-Simulation¶

The circuit generated by LegUp should be functionally equivalent to the input software. Users should not modify the generated Verilog, as it is overwritten every time LegUp runs.

SW/HW co-simulation can be used to verify that the generated hardware produces the same outputs for the same inputs as software. With SW/HW co-simulation, user does not have to write their own RTL testbench, as it is automatically generated. If user already has their own custom RTL testbench, one can optionally choose their custom RTL testbench (Specifying a Custom Test Bench) and not use SW/HW co-simulation.

To use SW/HW co-simulation, the input software program will be composed of two parts,

- A top-level function (and its descendant functions) to be synthesized to hardware by LegUp,

- A C/C++ testbench (the parent functions of the top-level function, typically

main()) that invokes the top-level function with test inputs and verifies outputs.

SW/HW co-simulation consists of the following automated steps:

- LegUp runs your software program and saves all the inputs passed to the top-level function.

- LegUp automatically creates an RTL testbench that reads in the inputs from step 1 and passes them into the LegUp-generated hardware module.

- ModelSim simulates the testbench and saves the LegUp-generated module outputs.

- LegUp runs your software program again, but uses the simulation outputs as the output of your top-level function.

You should write your C/C++ testbench such that the main() function returns a 0 when all outputs from the top-level function are as expected and otherwise return a non-zero value. We use this return value to determine whether the SW/HW co-simulation has passed.

In step 1, we verify that the program returns 0.

In step 4, we run the program using the outputs from simulation and if the LegUp-generated circuit matches the C program then main() should still return 0.

If the C/C++ program matches the RTL simulation then you should see: SW/HW co-simulation: PASS

For any values that are shared between software testbench and hardware functions (top-level and descendants), you can either pass in as arguments into the top-level function, or if it is a global variable, it can be directly accessed without being passed in as an argument.

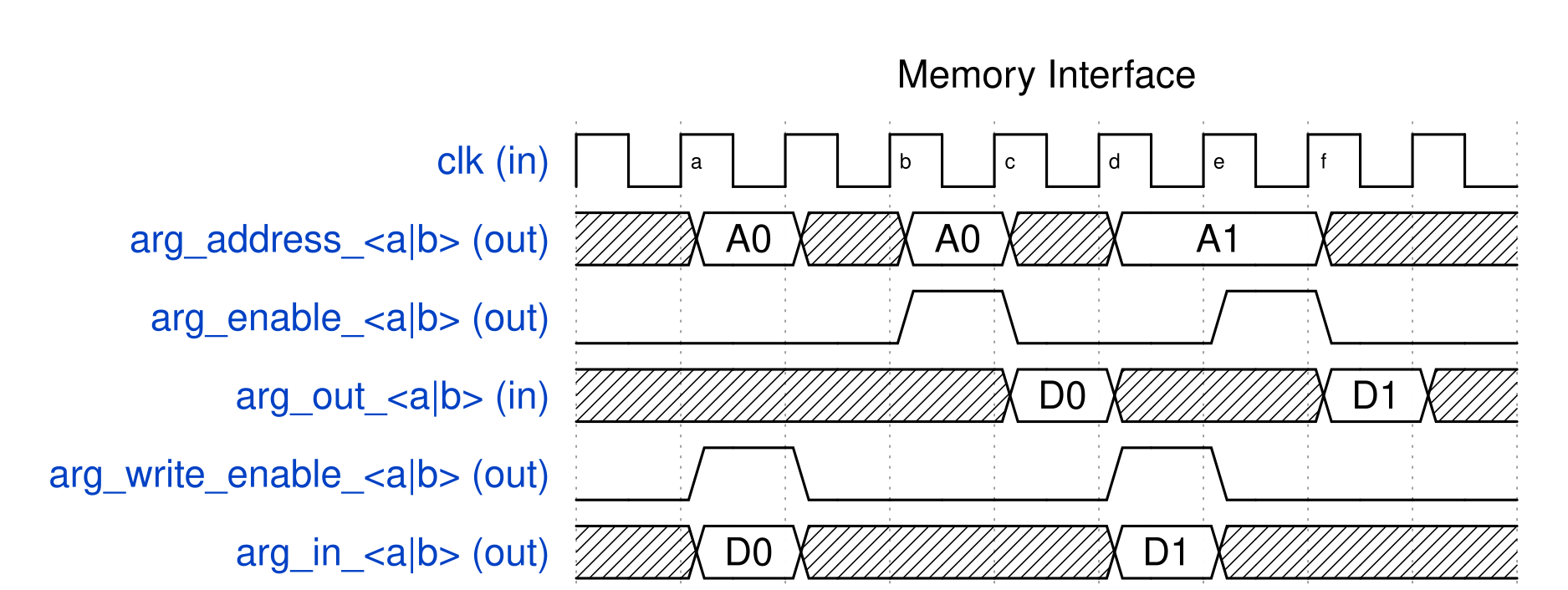

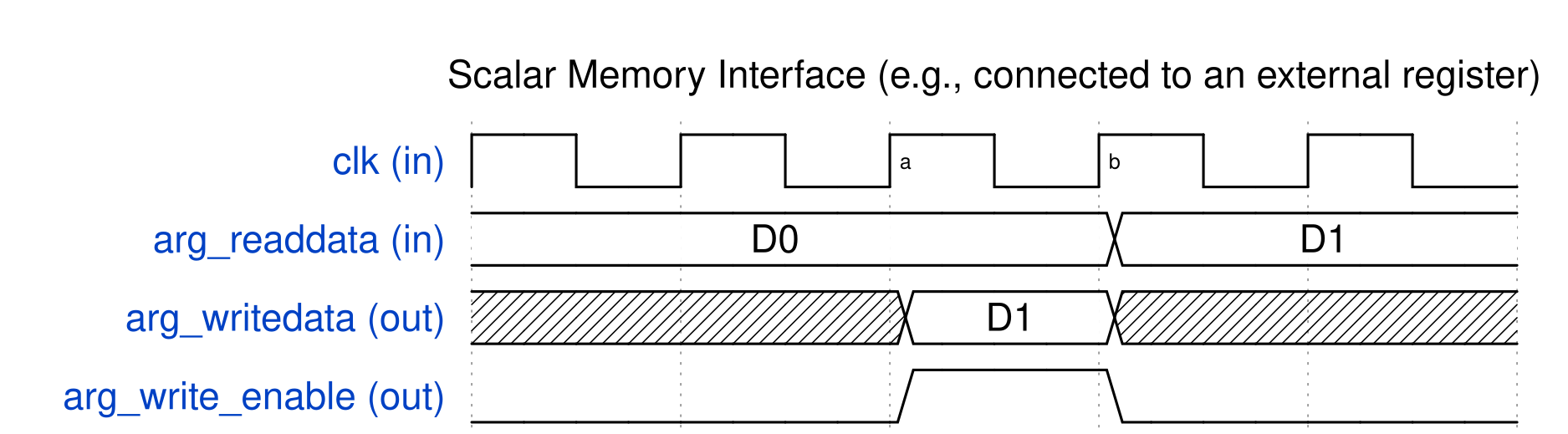

Any variables that are accessed by both software testbench and hardware functions will create an interface at the top-level module.

For example, if there is an array that is initialized in the software testbench and is used as an input to the hardware function, you may pass the array as an argument into the top-level function, which will create a memory interface for the array in the hardware core generated by LegUp.

Arguments into the top-level function can be constants, pointers, arrays, and FIFO data types.

The top-level function can also have a return value.

Please refer to the included example in the LegUp IDE, C++ Canny Edge Detection (SW/HW Co-Simulation), as a reference.

If a top-level argument is coming from a dynamically allocated array (e.g., malloc), the size of the array (in bytes) must be specified with our interface pragma (e.g., #pragma LEGUP interface argument(<arg_name>) depth(<int>)).

Please see the Configure Argument as Memory Interface for more details. The sizes of arrays that are statically allocated do not need to be specified with the pragma, as LegUp will automatically determine them.

For debugging purposes, LegUp converts any C printf

statements into Verilog $write statements so that values printed during

software execution will also be printed during hardware simulation. This

allows easy verification of the correctness of the hardware circuit. Verilog

$write statements are unsynthesizable and will not affect the final FPGA

hardware.

Limitations:

- When function pipelining is used, the top-level function cannot have array interfaces (array arguments or global arrays that are accessed from both SW testbench and HW functions).

2.7. Loop Pipelining¶

Loop pipelining is an optimization that can automatically extract loop-level parallelism to create an efficient hardware pipeline. It allows executing multiple loop iterations concurrently on the same pipelined hardware.

To use loop pipelining, the user needs to specify the loop pipeline pragma above the applicable loop:

#pragma LEGUP loop pipeline

for (i = 1; i < N; i++) {

a[i] = a[i-1] + 2

}

An important concept in loop pipelining is the initiation interval (II), which is the cycle interval between starting successive iterations of the loop. The best performance and hardware utilization is achieved when II=1, which means that successive iterations of the loop can begin every clock cycle. A pipelined loop with an II=2 means that successive iterations of the loop can begin every two clock cycles, corresponding to half of the throughput of an II=1 loop.

By default, LegUp always attempts to create a pipeline with an II=1. However, this is not possible in some cases due to resource constraints or cross-iteration dependencies. Please refer to Optimization Guide on more examples and details on loop pipelining. When II=1 cannot be met, LegUp’s pipeline scheduling algorithm will try to find the smallest possible II that satisfies the constraints and dependencies.

2.8. Multi-threading with Pthreads¶

In an FPGA hardware system, the same module can be instantiated multiple times to exploit spatial parallelism, where all module instances execute in parallel to achieve higher throughput. LegUp allows easily inferring such parallelism with the use of POSIX Threads (Pthreads), a standard multi-threaded programming paradigm that is commonly used in software. Parallelism described in software with Pthreads is automatically compiled to parallel hardware with LegUp. Each thread in software becomes an independent module that concurrently executes in hardware.

For example, the code snippet below creates N threads running the Foo function in software.

LegUp will correspondingly create N hardware instances all implementing the Foo function, and parallelize their executions.

LegUp also supports mutex and barrier APIs (from Pthreads library) so that synchronization between threads can be specified using locks and barriers.

void* Foo (int* arg);

for (i = 0; i < N; i++) {

pthread_create(&threads[i], NULL, Foo, &args[i]);

}

LegUp supports the most commonly used Pthread APIs, which are listed below in Supported Pthread APIs.

Note that for a Pthread kernel, LegUp will automatically inline any of its descendant functions.

The inlining cannot be overridden with the noinline pragma (see LegUp Pragmas Manual).

2.9. Supported Pthread APIs¶

LegUp currently supports the following Pthread functions:

| Pthread Functions |

|---|

| pthread_create |

| pthread_join |

| pthread_exit |

| pthread_mutex_lock |

| pthread_mutex_unlock |

| pthread_barrier_init |

| pthread_barrier_wait |

2.10. Data Flow Parallelism with Pthreads¶

Data flow parallelism is another commonly used technique to improve hardware throughput, where a succession of computational tasks that process continuous streams of data can execute in parallel. The concurrent execution of computational tasks can also be accurately described in software using Pthread APIs. In addition, the continuous streams of data flowing through the tasks can be inferred using LegUp’s built-in FIFO data structure (see Streaming Library).

Let’s take a look at the code snippet below, which is from the example project, “Fir Filter (Loop Pipelining with Pthreads)”, included in the LegUp IDE.

In the example, the main function contains the following code snippet:

// Create input and output FIFOs

legup::FIFO<int> input_fifo(/*depth*/ 2);

legup::FIFO<int> output_fifo(/*depth*/ 2);

// Build struct of FIFOs for the FIR thread.

struct thread_data data;

data.input = &input_fifo;

data.output = &output_fifo;

// Launch pthread kernels.

pthread_t thread_var_fir, thread_var_injector, thread_var_checker;

pthread_create(&thread_var_fir, NULL, FIRFilterStreaming, (void *)&data);

pthread_create(&thread_var_injector, NULL, test_input_injector, data.input);

pthread_create(&thread_var_checker, NULL, test_output_checker, data.output);

// Join threads.

pthread_join(thread_var_injector, NULL);

pthread_join(thread_var_checker, NULL);

The corresponding hardware is illustrated in the figure below.

The two legup::FIFO<int>s in the C++ code corresponds to the creation of the two FIFOs, where the bit-width is set according to the type shown in the constructor argument <int>.

The three pthread_create calls initiate and parallelize the executions of three computational tasks, where each task is passed in a FIFO (or a pointer to a struct containing more than one FIFO pointers) as its argument.

The FIFO connections and data flow directions are implied by the uses of FIFO read() and write() APIs.

For example, the test_input_injector function has a write() call writing data into the input_fifo, and the FIRFilterStreaming function uses a read() call to read data out from the input_fifo.

This means that the data flows through the input_fifo from test_input_injector to FIRFilterStreaming.

The pthread_join API is called to wait for the completion of test_input_injector and test_output_checker.

We do not “join” the FIRFilterStreaming thread since it contains an

infinite loop (see code below) that is always active and processes incoming

data from input_fifo whenever the FIFO is not empty.

This closely matches the always running behaviour of streaming hardware, where hardware is constantly running and processing data..

Now let’s take a look at the implementation of the main computational task (i.e., the FIRFilterStreaming threading function).

void *FIRFilterStreaming(void *threadArg) {

struct thread_data *arg = (struct thread_data *)threadArg;

legup::FIFO<int> *input_fifo = arg->input, *output_fifo = arg->output;

// This loop is pipelined and will be "always running", just like how a

// streaming module runs whenever a new input is available.

#pragma LEGUP loop pipeline

while (1) {

// Read from input FIFO.

int in = input_fifo->read();

static int previous[TAPS] = {0}; // Need to store the last TAPS - 1 samples.

const int coefficients[TAPS] = {0, 1, 2, 3, 4, 5, 6, 7,

8, 9, 10, 11, 12, 13, 14, 15};

int j = 0, temp = 0;

for (j = (TAPS - 1); j >= 1; j -= 1)

previous[j] = previous[j - 1];

previous[0] = in;

for (j = 0; j < TAPS; j++)

temp += previous[TAPS - j - 1] * coefficients[j];

int output = (previous[TAPS - 1] == 0) ? 0 : temp;

// Write to output FIFO.

output_fifo->write(output);

}

pthread_exit(NULL);

}

In the code shown in the example project, you will notice that all three threading functions contain a loop, which repeatedly reads and/or writes data from/to FIFOs to perform processing. In LegUp, this is how one can specify that functions are continuously processing data streams that are flowing through FIFOs.

2.10.1. Further Throughput Enhancement with Loop Pipelining¶

In this example, the throughput of the streaming circuit will be limited by how frequently the functions can start processing new data (i.e., how frequently the new loop iterations can be started).

For instance, if the slowest function among the three functions can only start a new loop iteration every 4 cycles, then the throughput of the entire streaming circuit will be limited to processing one piece of data every 4 cycles.

Therefore, as you may have guessed, we can further improve the circuit throughput by pipelining the loops in the three functions.

If you run LegUp synthesis for the example (Compile Software to Hardware), you should see in the Pipeline Result section of our report file, summary.legup.rpt, that all loops can be pipelined with an initiation interval of 1.

That means all functions can start a new iteration every clock cycle, and hence the entire streaming circuit can process one piece of data every clock cycle.

Now run the simulation (Simulate Hardware) to confirm our expected throughput. The reported cycle latency should be just slightly more than the number of data samples to be processed

(INPUTSIZE is set to 128; the extra cycles are spent on activating the parallel accelerators, flushing out the pipelines, and verifying the results).

2.11. Function Pipelining¶

You have just seen how an efficient streaming circuit can be described in software by using loop pipelining with Pthreads.

An alternative way to describe such a streaming circuit is to use Function Pipelining.

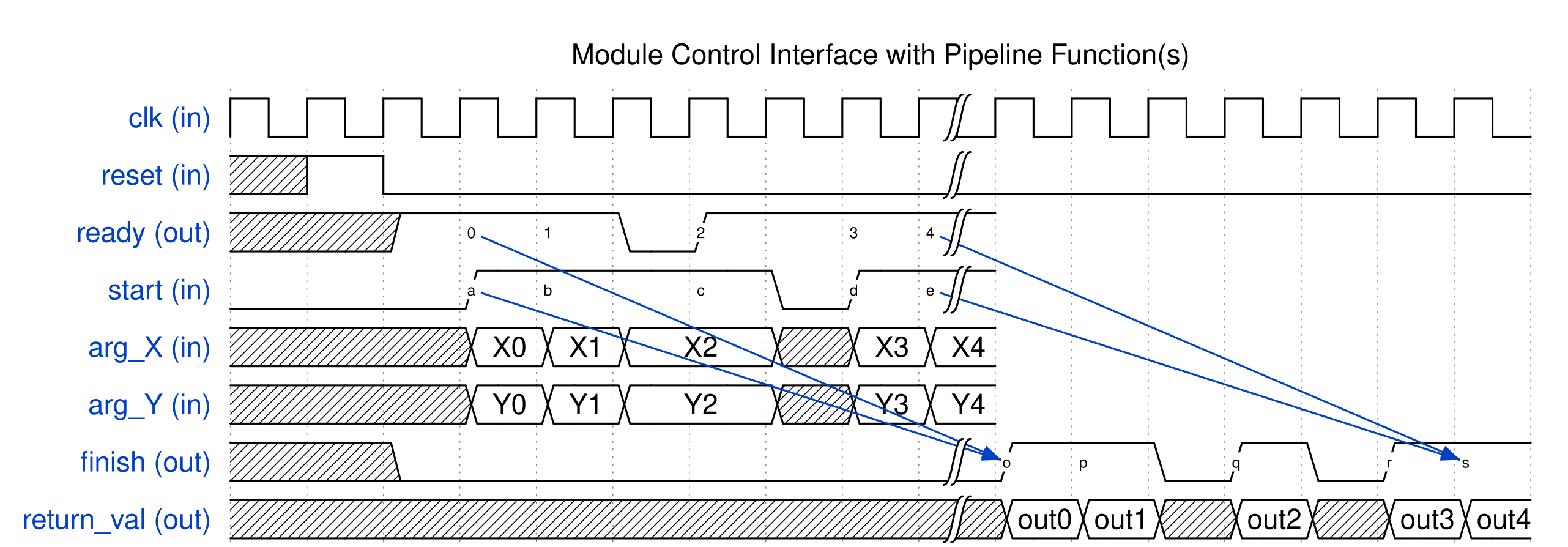

When a function is marked to be pipelined (by using the Pipeline Function constraint), LegUp will implement the function as a pipelined circuit that can start a new invocation every II cycles.

That is, the circuit can execute again while its previous invocation is still executing, allowing it to continuously process incoming data in a pipelined fashion.

This essentially has the same circuit behaviour as what was described in the previous example (loop pipelining with Pthreads) in the Data Flow Parallelism with Pthreads section, without having to write the software code using Pthreads.

This feature also allows multiple functions that are added to the Pipeline function constraint to execute in parallel, achieving the same hardware behaviour as the previous loop pipelining with Pthreads example.

When using this feature, the user-specified top-level function (see Specifying the Top-level Function) can only call functions that are specified to be function pipelined (e.g., the top-level function cannot call one function pipeline and one non-function pipeline). The top-level function cannot have any control flow (i.e., loops, if/else statements), and cannot perform any operations other than declaring variables (i.e., memories, FIFOs) and calling function pipelines.

For SW/HW co-simulation, the top-level function that calls one or more function pipelines can only have interfaces that are created from FIFOs and constant values (top-level interfaces are created from top-level function arguments and global variables that are accessed from both software testbench functions and hardware kernel functions).

Please refer to the C++ Canny Edge Detection (SW/HW Co-Simulation) example included in the LegUp IDE for an example of using function pipelining.

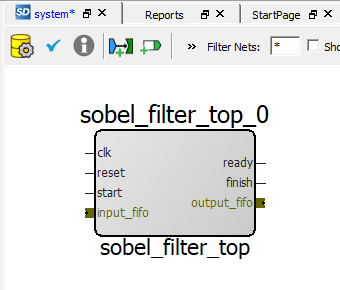

In this example, you should see the top-level function, canny, as below.

void canny(legup::FIFO<unsigned char> &input_fifo,

legup::FIFO<unsigned char> &output_fifo) {

#pragma LEGUP function top

legup::FIFO<unsigned char> output_fifo_gf(/* depth = */ 2);

legup::FIFO<unsigned short> output_fifo_sf(/* depth = */ 2);

legup::FIFO<unsigned char> output_fifo_nm(/* depth = */ 2);

gaussian_filter(input_fifo, output_fifo_gf);

sobel_filter(output_fifo_gf, output_fifo_sf);

nonmaximum_suppression(output_fifo_sf, output_fifo_nm);

hysteresis_filter(output_fifo_nm, output_fifo);

}

As shown above, the top-level function has been specified with #pragma LEGUP function top. The top-level function calls four functions, gaussian_filter, sobel_filter, nonmaximum_suppression, and hysteresis_filter, each of which are specified to be function pipelined (with #pragma LEGUP function pipeline).

The top-level arguments are input_fifo and output_fifo. The input_fifo is given as an argument into the first function, gaussian_filter, and gives the inputs into the overall circuit.

The output_fifo is given as an argument into the last function, hysteresis_filter, and receives the outputs of the overall circuit.

There are also intermediate FIFOs, output_fifo_gf, output_fifo_sf, and output_fifo_nm, which are given as arguments into the function pipelines and thus connect them (i.e., outputs of gaussian_filter is given as inputs to sobel_filter).

When synthesizing a top-level function with multiple pipelined sub-functions, LegUp will automatically parallelize the execution of all sub-functions that are called in the top-level function, forming a streaming circuit with data flow parallelism.

In this case gaussian_filter executes as soon as there is data in the input_fifo, and sobel_filter starts running as soon as there is data in the output_fifo_sf.

In other words, a function pipeline does not wait for its previous function pipeline to completely finish running before it starts to execute, but rather, it starts running as early as possible.

Each function pipeline also starts working on the next data while the previous data is being processed (in a pipelined fashion).

If the initiation interval (II) is 1, a function pipeline starts processing new data every clock cycle.

Once the function pipelines reach steady-state, all function pipelines execute concurrently.

This example showcases the synthesis of a streaming circuit that consists of a succession of concurrently executing pipelined functions.

Note

In the generated Verilog for a function pipelined hardware, the start input port serves as an enable signal to the circuit.

The circuit stops running when the start signal is de-asserted. To have the circuit running continuously, the start input port should be kept high.

2.12. Memory Partitioning¶

Memory Partitioning is an optimization where aggregate types such as arrays and structs are partitioned into smaller pieces allowing for a greater number of reads and writes (accesses) per cycle. LegUp instantiates a RAM for each aggregate type where each RAM has up to two ports (allowing up to two reads/writes per cycle). Partitioning aggregate types into smaller memories or into its individual elements allows for more accesses per cycle and improves memory bandwidth.

There are two flavors of memory partitioning, access-based partitioning and user-specified partitioning.

Access-based partitioning is automatically applied to all memories except for those at the top-level interfaces (I/O Memory). This flavor of memory partitioning will analyze the ranges of all accesses to a memory and create partitions based on these accesses. After analyzing all memory accesses, independent partitions will be implemented in independent memories. If two partitions overlap in what they access, they will be merged into one partition. If there are any sections of the memory that is not accessed, it will be discarded to reduce memory usage. For example, if there are two loops, where one loop accesses the first half of an array and the second loop accesses the second half of the array, the accesses to the array from the two loops are completely independent. In this case the array will be partitioned into two and be implemented in two memories, one that holds the first half of the array and another that holds the second half of the array. However, if both loops access the entire array, their accesses overlap, hence the two partitions will be merged into one and the array will just be implemented in a single memory (without being partitioned). Access-based partitioning is done automatically without needing any memory partition pragmas, in order to automatically improve memory bandwidth and reduce memory usage whenever possible.

User-specified partitioning can be achieved with the LEGUP memory partition pragma (see Partition Top-Level Interface and Partition Top-Level Interface).

User-specified partitioning partitions memories based on a user-specified dimension. Memories are then partitioned completely on the specified dimension,

which means the memory is partitioned into individual elements of the specified array dimension. More information on the pragmas can be found in the

pragma references linked above. Unaccessed sections of the original memory are also discarded.

Note

Accessing memory outside of an array dimension is not supported by memory partitioning and may cause incorrect circuit behavior. An example of this is casting a 2-d array to a pointer (1-d) and iterating through the entire array as 1-d.

2.12.1. An Access-Based Memory Partitioning Example¶

Access-based partitioning is automatically applied to all memories by LegUp except for interface memories (top-level function arguments and global variables accessed by both software testbench and hardware functions) to the top-level function. Interface memories need to be partitioned with the memory partition pragma. See the code snippet below that illustrate an example of accessed-based partitioning.

int array[8];

int result = 0;

...

#pragma unroll

for (i = 0; i < 8; i++) {

result += array[i]

}

In the example above, each iteration of the loop access an element of array and adds it to result. The unroll pragma is applied to completely unroll the loop.

Without partitioning, LegUp will implement this array in a RAM (with eight elements), where an FPGA RAM can have up to two read/write ports.

In this case, the loop will take four cycles, as eight reads are needed from the RAM and up to two reads can be performed per cycle with a two ported memory.

With access-based partitioning, the accesses to the above array will be analyzed. With unrolling, there will be eight load instructions, each of which will access a single array element, with no overlaps in accesses between the load instructions (i.e., the accesses of each load instruction are independent). This creates 8 partitions, with one array element in each partition. After partitioning, all eight reads can occur in the same clock cycle, as each memory will only need one memory access. Hence the entire loop can finish in a single cycle. With this example, we can see that memory partitioning can help to improve memory bandwidth and improve performance.

With access-based partitioning, LegUp outputs messages to the console specifying which memory has been partitioned into how many partitions, as shown below:

Info: Partitioning memory: array into 8 partitions.

Limitations:

- Accessing memory outside of an array dimension is not supported by memory partitioning and may cause incorrect circuit behavior. An example of this is lowering a 2-d array to a pointer and iterating through the size of the 2-d array.

- Pointers that alias to different memories or different sections of the same memory (e.g. a pointer that is assigned to multiple memories based on a condition) are not supported in memory partitioning. The aliased memories will not be partitioned.

Please refer to the Optimization Guide for more examples and details.

2.12.2. A User-Specified Memory Partitioning Example¶

User-specified partitioning is where the user explicitly specifies a memory to be partitioned via the memory partition pragma (#pragma LEGUP memory partition variable, #pragma LEGUP memory partition argument).

User-specified partitioning also analyzes accesses but partitions based on a predefined structure and array dimension.

#pragma LEGUP memory partition variable(array)

int array[8];

int result = 0;

...

for (i = 0; i < 8; i++) {

result += array[i]

}

The example above shows the same example that was shown for access-based partitioning, however, the loop is not unrolled in this case. Access-based partitioning will try to partition the array but will only find one load instruction in the loop that accesses the entire array. This preventing access-based partitioning as all eight accesses come from the same load instruction.

User-specified partitioning can be used to force partitioning of this array with a predefined structure. In the example above, the memory partition pragma specifies the array to be partitioned completely into individual elements. After partitioning, the array will be partitioned into eight individual elements just like with the access-based partitioning example above. The benefit in this case is that the loop does not have to be unrolled, which can be useful in cases like when the loop is pipelined and cannot be unrolled (see Loop Pipelining).

// partitioned completely up to DIM1 from left to right

#pragma LEGUP memory partition variable(array3d) type(complete) dim(1)

int array3d[DIM2][DIM1][DIM0];

The memory partition pragma has optional arguments type and dim that specifies the partition type and dimension to be partitioned

up to, respectively. The default type is complete which means to partition the array into individual elements, and the default dimension

is 0 which means to partition up to the right-most dimension. The type can also specified to be none to prevent partitioning for a

specific memory. The dimension provided specifies the dimension to be partitioned up to, with the resulting partitions being elements of

that array dimension. For example, in the above code snippet array3d is specified to be partitioned up dimension 1, which means array

dimensions corresponding to DIM2 and DIM1 will be completely partitioned to produce DIM2``x``DIM1 partitions of int[DIM0].

Lower numbered dimensions correspond to right-ward dimensions of the array and higher numbered dimensions correspond to left-ward dimensions

of the array, as shown by the DIMX macros specifying the sizes of the dimensions of array3d.

With user-specified partitioning, LegUp outputs messages to the console stating the variable set to be partitioned and its settings. LegUp also outputs messages specifying if a memory has been partitioned and into how many partitions. If a memory is specified to be partitioned but cannot be partitioned, LegUp will output a warning.

Info: Found user-specified memory: "array" on line 6 of test.c, with partition type: Complete, partition dimension: 0.

Info: Found user-specified memory: "array3d" on line 27 of test.c, with partition type: Complete, partition dimension: 1.

Warning: The user-specified memory "array3d" on line 27 of test.c could not be partitioned because a loop variable indexing into a multi-dimenional array comes from a loop variable and goes out of the array dimension bounds. Going outside of array dimension bounds is not supported for memory partitioning.

Info: Partitioning memory: array into 8 partitions.

Limitations:

- Accessing memory outside of an array dimension is not supported by memory partitioning and will sometimes cause incorrect circuit behavior. An example of this is lowering a 2-d array to a pointer and iterating through the size of the 2-d array.

- Pointers that alias to different memories or different sections of the same memory (e.g. a pointer that is assigned to multiple memories based on a condition) are not supported in memory partitioning. The aliased memories will not be partitioned. The exception to this is that functions that get called with different pointers are handled properly for user-specified partitioning.

Please refer to the Optimization Guide for more examples and details.

2.13. LegUp C++ Library¶

LegUp includes a number of C++ libraries that allow creation of efficient hardware.

2.13.1. Streaming Library¶

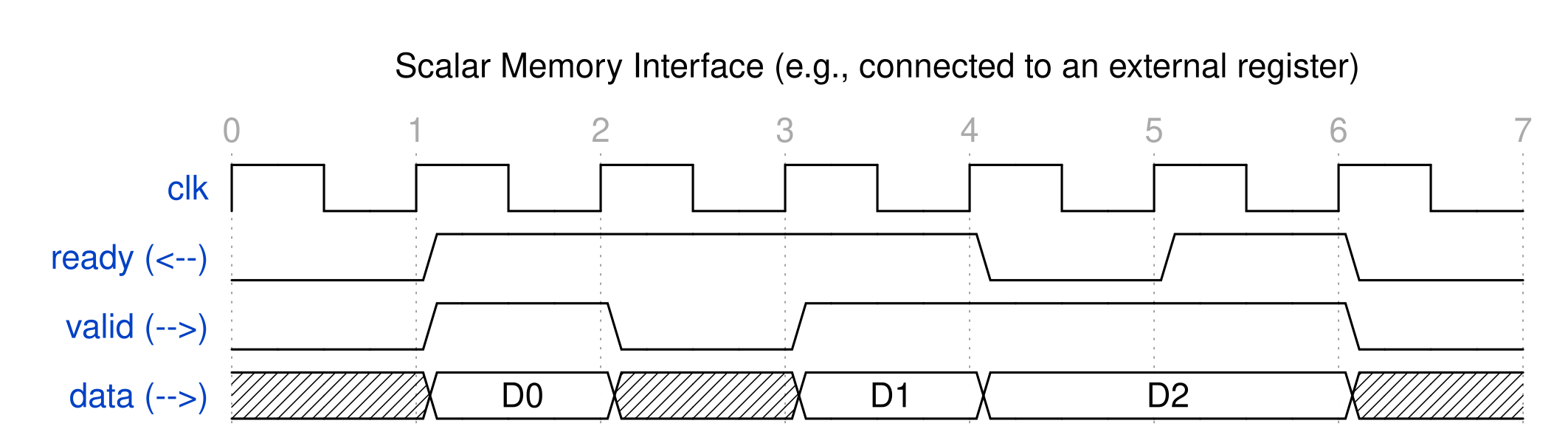

The streaming library includes the FIFO (first-in first-out) data structure along with its associated API functions. The library can be compiled in software to run on the host machine (e.g., x86). Each FIFO instance in software is implemented as a First Word Fall Through (FWFT) FIFO in hardware.

The FIFO library is provided as a C++ template class. The FIFO data type can be flexibly defined and specified as a template argument of the FIFO object. For example, the FIFO data type could be defined as a struct containing multiple integers:

struct AxisWord { ap_uint<64> data; ap_uint<8> keep; ap_uint<1> last; };

legup::FIFO<AxisWord> my_axi_stream_interface_fifo;

Note

A valid data type could be any of the 1) C/C++ primitive integer types, 2) LegUp’s C++ Arbitrary Precision Data Types Library (ap_int, ap_uint, ap_fixpt, ap_ufixpt), or 3) a struct containing primitive integer types or LegUp’s C++ arbitrary Precision Data Types. In the case of a struct type, it is prohibited to use ‘ready’ or ‘valid’ as the name of a struct field. This is because in the generated Verilog, a FIFO object will introduce an AXI-stream interface associated with valid/ready handshaking signals and the names will overlap.

You can use the C++ streaming library by including the header file:

#include "legup/streaming.hpp"

Note

Users should always use the APIs below to create and access FIFOs. Any other uses of FIFOs are not supported in LegUp.

An example code for using the streaming library is shown below.

// declare a 32-bit wide fifo

legup::FIFO<unsigned> my_fifo;

// set the fifo's depth to 10

my_fifo.setDepth(10);

// write to the fifo

my_fifo.write(data);

// read from the fifo

MyStructT data = my_fifo.read();

// check if fifo is empty

bool is_empty = my_fifo.empty();

// check if the fifo is full

bool is_full = my_fifo.full();

// get the number of words stored in the fifo

unsigned numWords = my_fifo.get_usedw();

// declare a 32-bit wide fifo with a depth of 10

legup::FIFO<unsigned> my_fifo_depth_10(10);

As shown above, there are two ways of creating a FIFO (legup::FIFO<unsigned> my_fifo and legup::FIFO<unsigned> my_fifo_depth_10(10)).

The width of the FIFO is determined based on the templated data type of the FIFO.

For example, FIFO<unsigned> my_fifo creates a FIFO that is 32 bits wide.

The FIFO’s data type can be any primitive type or arbitrary bitwidth types (ap_int/ap_uint/ap_fixpt/ap_ufixpt),

or a struct of primitive/arbitrary bitwidth types (or nested structs of those types) but

cannot be a pointer or an array (or a struct with a pointer/array).

An array or a struct of FIFOs is supported.

The depth of the FIFO can be provided by the user as a constructor argument

when the FIFO is declared, or it can also be set afterwards with the setDepth(unsigned depth) function.

If the depth is not provided by the user, LegUp uses a default FIFO depth of 2.

The depth of a FIFO can also be set to 0, in which case LegUp will create direct ready/valid/data wire connections (without a FIFO) between the source and the sink.

2.13.1.1. Streaming Library - Blocking Behaviour¶

Note that the fifo read() and write() calls are blocking.

Hence if a module attempts to read from a FIFO that is empty, it will be stalled.

Similarly, if it attempts to write to a FIFO that is full, it will be stalled.

If you want non-blocking behaviour, you can check if the FIFO is

empty (with empty()) before calling read(), and likewise, check

if the FIFO is full (with full()) before calling write() (see Streaming Library - Non-Blocking Behaviour).

With the blocking behaviour, if the depths of FIFOs are not sized properly, it can cause a deadlock. LegUp prints out messages to alert the user that a FIFO is causing stalls.

In hardware simulation, the following messages are shown.

Warning: fifo_write() has been stalled for 1000000 cycles due to FIFO being full.

Warning: fifo_read() has been stalled for 1000000 cycles due to FIFO being empty.

Warning: fifo_read() has been stalled for 1000000 cycles due to FIFO being empty.

Warning: fifo_write() has been stalled for 1000000 cycles due to FIFO being full.

Warning: fifo_read() has been stalled for 1000000 cycles due to FIFO being empty.

Warning: fifo_read() has been stalled for 1000000 cycles due to FIFO being empty.

If you continue to see these messages, you can suspect that there may be a deadlock. In this case, we recommend making sure there is no blocking read from an empty FIFO or blocking write to a full FIFO, and potentially increasing the depth of the FIFOs.

Note

We recommend the minimum depth of a FIFO to be 2, as a depth of 1 FIFO can cause excessive stalls.

2.13.1.2. Streaming Library - Non-Blocking Behaviour¶

As mentioned above, non-blocking FIFO behaviour can be created with the use of empty() and full() functions.

Non-blocking FIFO read and write can be achieved as shown below.

if (!fifo_a.empty())

unsigned data_in = fifo_a.read();

if (!fifo_b.full())

fifo_b.write(data_out);

Note

A deadlock may occur if a fifo with a depth of 0 uses non-blocking write on its source and non-block read on its sink.

2.13.2. C++ Arbitrary Precision Data Types Library¶

The C++ Arbitrary Precision Data Types Library provides numeric types ap_[u]int and ap_[u]fixpt which can be used to specify data types of arbitrary bitwidths (i.e., ap_int<9> for a 9-bit integer data) in software,

which is efficiently translated to create hardware for that exact width. It also provides bit selection and concatenation utilities for bit-level access to data.

2.13.3. C++ Arbitrary Precision Integer Library¶

The C++ ap_[u]int type allows specifying signed and unsigned data types of any bitwidth.

They can be used for arithmetic, concatenation, and bit level operations. You can use the ap_[u]int type

by including the following header file.

#include "legup/ap_int.hpp"

The desired width of the ap_[u]int can be specified as a template parameter, ap_[u]int<W>,

allowing for wider types than the existing C arbitrary bit-width library.

An example using the C++ library is shown below.

#include "legup/ap_int.hpp"

#include <iostream>

using namespace legup;

int main() {

ap_uint<128> data("0123456789ABCDEF0123456789ABCDEF");

ap_int<4> res(0);

for (ap_uint<8> i = 0; i < data.length(); i += 4) {

// If this four bit range of data is <= 7

if (data(i + 3, i) <= 7) {

res -= 1;

} else {

res += 1;

}

}

// iostream doesn't synthesize to hardware, so only include this

// line in software compilation. Any block surrounded by this ifdef

// will be ignored when compiling to hardware.

#ifdef LEGUP_SW

std::cout << res << std::endl;

#endif

}

In the above code we iterate through a 128 bit unsigned integer in four bit segments, and track the difference between how many segments are above and below 7. All variables have been reduced to their specified minimum widths.

2.13.3.1. Printing Arbitrary Precision integers¶

The C++ Arbitrary Precision Integer Library provides some utilities for printing ap_[u]int types. The to_string(base, signedness) function

takes an optional base argument (one of 2, 10, and 16) which defaults to 16, as well as an optional signedness argument which determines if the data

should be printed as signed or unsigned, which defaults to false. The output stream operator << is also overloaded to put arbitrary precision integer

types in the output stream as if they were called with the default to_string arguments.

Some example code using these utilities is shown below.

#include "legup/ap_int.hpp"

#include <stdio.h>

#include <iostream.h>

using namespace legup;

using namespace std;

...

ap_uint<8> ap_u = 21;

ap_int<8> ap = -22;

// prints: 0x15

cout << "0x" << ap_u << endl;

// prints: -22

printf("%s\n", ap.to_string(10, true));

// prints: 234

printf("%s\n", ap.to_string(10));

// prints 00010101

printf("%s\n", ap_u.to_string(2));

2.13.3.2. Initializing Arbitrary Precision integers¶

The ap_[u]int types can be constructed and assigned to from other arbitrary precision integers, C++ integral

types, ap_[u]fixpt types, as well as concatenations and bit selections. They can also be initialized from a hexadecimal

string describing the exact bits.

Some examples of initializing arbitrary precision integer types are show below.

#include "legup/ap_int.hpp"

#include "legup/ap_fixpt.hpp"

using namespace legup;

...

// Initialized to -7

ap_int<4> int1 = -7;

// Initialized to 15

// The bits below the decimal are truncated.

ap_uint<4> int2 = ap_ufixpt<5, 4, AP_RND, AP_SAT>(15.5);

// Initialized to 132

// Could also write "0x84"

// The 0x is optional

ap_uint<8> int3("84");

// Initialized to 4

// Bit selections are zero extended to match widths

ap_int<4> int4 = int3(2, 0);

// Initialized to 128

// ap_uint types are zero extended to match widths

// ap_int types are sign extended to match widths

ap_int<16> int5 = ap_uint<8>("80");

// Initialized to 2

// The value 4098 (= 4096 + 2) is wrapped to 2

ap_uint<12> int6 = 4098;

2.13.3.3. C++ Arbitrary Precision Integer Arithmetic¶

The C++ Arbitrary Precision Integer library supports all standard arithmetic, logical bitwise, shifts, and comparison operations. Note that for shifting that >> and << are logical, and the .ashr(x) function implements arithmetic right shift. The output types of an operation are wider than their operands as necessary to hold the result. Operands of ap_int, and ap_uint type, as well as operands of different widths can be mixed freely. By default ap_int will be sign extended to the appropriate width for an operation, while ap_uint will be zero extended. When mixing ap_int and ap_uint in an arithmetic operation the resulting type will always be ap_int. Some of this behaviour is demonstrated in the example below.

#include "legup/ap_int.hpp"

using namespace legup;

...

ap_int<8> a = 7;

ap_int<12> b = 100;

ap_uint<7> c = 3;

// Multiply expands to the sum of a and b's width

ap_int<20> d = a * b;

// Add result in max of widths + 1

ap_int<13> e = a + b;

// Logical bitwise ops result in max of widths

ap_int<12> f = a & b;

// Mixing ap_int and ap_uint results in ap_int

ap_int<9> g = a + c;

// ap_(u)int types can be mixed freely with integral types

ap_int<33> h = -1 - a;

2.13.3.4. C++ Arbitrary Precision Integer Explicit Conversions¶

The ap_[u]int types support several explicit conversion functions which allow the value to be interpreted in different ways.

The to_uint64() function will return a 64 bit unsigned long long with the same bits as the original ap_[u]int, zero extending

and wrapping as necessary. Assigning an ap_[u]int wider than 64 bits to an unsigned long long would also wrap to match widths,

without needing to call to_uint64(). The to_int64() function will return a 64 bit signed long long and will sign extend as necessary.

An arbitrary precision integer data type can be casted to an arbitrary precision fixed-point data type with the to_fixpt<I_W>() and to_ufixpt<I_W>() functions (returns ap_fixpt<W, I_W> and ap_ufixpt<W, I_W> types respectively), with the same bits as the original ap_[u]int<W>.

For more on the ap_[u]fixpt template, please refer to the C++ Arbitrary Precision Fixed Point Library section.

An example demonstrating these functions is shown below.

#include "legup/ap_int.hpp"

#include "legup/ap_fixpt.hpp"

using namespace legup;

...

// zero extend 16 bit -32768 to 64 bit 32768

unsigned long long A = ap_int<16>(-32768).to_uint64();

// wrap from 65 bit 2**64 + 1 to 64 bit 1

unsigned long long B = ap_uint<65>("10000000000000001").to_uint64();

// interpret 8 bit uint as 8 bit ufixpt with four bits above decimal

// by value 248 becomes 15.5 (== 248 / 2**4)

ap_ufixpt<8, 4> C = ap_uint<8>(248).to_ufixpt<4>();

// interpret 4 bit int as 4 bit fixpt with leading bit 8 bits above decimal

// by value -8 becomes -128 (== -8 * 2**4)

ap_fixpt<4, 8> D = ap_int<4>(-8).to_fixpt<8>();

// interpret 6 bit int as 6 bit ufixpt with 6 bits above decimal

// by value 8 becomes 8

ap_ufixpt<6, 6> E = ap_int<6>(8).to_ufixpt<6>();

2.13.4. C++ Arbitrary Precision Bit-level Operations¶

The C++ Arbitrary Precision Library provides utilities to select, and update ranges of arbitrary precision data, as well as perform concatenation.

Bit selection and updating is defined for all C++ arbitrary precision numeric types. Concatenation is defined on all C++ Arbitrary Precision Library constructs including arbitrary precision numeric types, as well as bit selections, and other concatenations.

2.13.4.1. Selecting and Assigning to a Range of Bits¶

#include "legup/ap_int.hpp"

using namespace legup;

...

ap_uint<8> A(0xBC);

ap_int<4> B = A(7, 4); // B initialized as 0xB

ap_int<4> C = A[2]; // C initialized as 0x1

// A[2] is zero extended to match widths

A(3,0) = 0xA; // A becomes 0xBA

On C++ arbitrary precision types num(a, b) will select and create a reference to the underlying arbitrary precision value. The operator num[a] selects and creates a reference to a single bit. This reference can be assigned to, and used to access the underlying data.

2.13.4.2. Bit Concatenation¶

#include "legup/ap_int.hpp"

using namespace legup;

...

ap_uint<4> A(0xA);

ap_uint<8> B(0xCB);

ap_uint<8> AB( (A, B(3,0)) ); // AB initialized as 0xAB

ap_uint<12> ABC( (A, ap_uint<4>(0xB), B(7,4)) ); // ABC initialized as 0xABC

Putting any C++ arbitrary precision types in a comma separated list will generate a concatenation. The concatenation can currently be used to create arbitrary precision types (zero extending or truncating to match widths), but can not be assigned to.

2.13.5. C++ Arbitrary Precision Fixed Point Library¶

The C++ Arbitrary Precision Fixed Point library provides fast bit accurate software simulation, and efficient equivalent

hardware generation. The C++ ap_[u]fixpt types allow specifying signed and unsigned fixed point numbers of arbitrary width,

and arbitrary fixed position relative to the decimal. They can be used

for arithmetic, concatenation, and bit level operations. You can use the ap_[u]fixpt type by including the

following header file.

#include "legup/ap_fixpt.hpp"

The ap_[u]fixpt template allows specifying the width of the type, how far the most significant bit is above the decimal,

as well as several quantization and overflow modes.

Quantization and overflow handling is triggered during assignment and construction. The policies used for quantization and overflow are based on the quantization and overflow modes of the left hand side of an assignment, or of the value being constructed.

The template ap_[u]fixpt<W, I_W, Q_M, O_M> is described in the following table. The last two template parameters are optional.

| Parameter | Description | |

|---|---|---|

| W | The width of the word in bits. | |

| I_W | How far the most significant bit is above the decimal. I_W can be negative. I_W > 0 implies the MSB is above the decimal. I_W <= 0 implies the MSB is below the decimal. If W >= I_W >= 0 then I_W is the number of bits used for the integer portion. |

|

| Q_M | The Quantization (rounding) mode used when a result has precision below the least significant bit. Defaults to AP_TRN. |

|

| AP_TRN | Truncate bits below the LSB bringing the result closer to -∞. | |

| AP_TRN_ZERO | Truncate bits below the LSB bringing the result closer to zero. | |

| AP_RND | Round to the nearest representable value with the midpoint going towards +∞. | |

| AP_RND_INF | Round to the nearest representable value with the midpoint going towards -∞ for negative numbers, and +∞ for positive numbers. | |

| AP_RND_MIN_INF | Round to the nearest representable value with the midpoint going towards -∞. | |

| AP_RND_CONV | Round to the nearest representable value with the midpoint going towards the nearest even multiple of the quantum. (This helps to remove bias in rounding). | |

| O_M | The Overflow mode used when a result exceeds the maximum or minimum representable value. Defaults to AP_WRAP. |

|

| AP_WRAP | Wraparound between the minimum and maximum representable values in the range. | |

| AP_SAT | On positive and negative overflow saturate the result to the maximum or minimum value in the range respectively. | |

| AP_SAT_ZERO | On any overflow set the result to zero. | |

| AP_SAT_SYM | On positive and negative overflow saturate the result to the maximum or minimum value in the range symmetrically about zero. For ap_ufixpt this is the same as AP_SAT. |

|

An ap_[u]fixpt is a W bit wide integer, in 2’s complement for the signed case, which

has some fixed position relative to the decimal. This means that arithmetic is efficiently

implemented as integer operations with some shifting to line up decimals. Generally a

fixed point number can be thought of as a signed or unsigned integer word multiplied by 2^(I_W - W).

The range of values that an ap_[u]fixpt can take on, as well as the quantum that

separates those values is determined by the W, and I_W template parameters. The AP_SAT_SYM

overflow mode forces the range to be symmetrical about zero for signed fixed point types.

This information is described in the following table. Q here represents the quantum.

| Type | Quantum | Range | AP_SAT_SYM Range |

| ap_ufixpt | 2^(I_W - W) | 0 to 2^(I_W) - Q |

0 to 2^(I_W) - Q |

| ap_fixpt | 2^(I_W - W) | -2^(I_W - 1) to 2^(I_W - 1) - Q |

-2^(I_W - 1) + Q to 2^(I_W - 1) - Q |

Some ap_[u]fixpt ranges are demonstrated in the following table.

| Type | Quantum | Range |

| ap_fixpt<8, 4> | 0.0625 | -8 to 7.9375 |

| ap_ufixpt<4, 12> | 256 | 0 to 3840 |

| ap_ufixpt<4, -2> | 0.015625 | 0 to 0.234375 |

An example using ap_fixpt is show below.

#include "legup/ap_fixpt.hpp"

#include "legup/streaming.hpp"

#define TAPS 8

// A signed fixed point type with 10 integer bits and 6 fractional bits

// It employs convergent rounding for quantization, and saturation for overflow.

typedef legup::ap_fixpt<16, 10, legup::AP_RND_CONV, legup::AP_SAT> fixpt_t;

// A signed fixed point type with 3 integer bits and 1 fractional bit

// It uses the default truncation, and wrapping modes.

typedef legup::ap_fixpt<4, 3> fixpt_s_t;

// This function is marked function_pipeline in the config

void fir(legup::FIFO<fixpt_t> &input_fifo,

legup::FIFO<fixpt_t> &output_fifo) {

fixpt_t in = input_fifo.read();

static fixpt_t previous[TAPS] = {0};

const fixpt_s_t coefficients[TAPS] = {-2, -1.5, -1, -0.5, 0.5, 1, 1.5, 2};

for (unsigned i = (TAPS - 1); i > 0; --i) {

previous[i] = previous[i - 1];

}

previous[0] = in;

fixpt_t accumulate[TAPS];

for (unsigned i = 0; i < TAPS; ++i) {

accumulate[i] = previous[i] * coefficients[i];

}

// Accumulate results, doing adds and saturation in

// a binary tree to reduce the number of serial saturation

// checks. This significantly improves pipelining results

// over serially adding results together when saturation

// is required.

for (unsigned i = TAPS >> 1; i > 0; i >>= 1) {

for (unsigned j = 0; j < i; ++j) {

accumulate[j] += accumulate[j + i];

}

}

output_fifo.write(accumulate[0]);

}

This example implements a streaming FIR filter with 8 taps. Using the minimum width ap_fixpt to represent

the constant coefficients allows the multiply to happen at a smaller width than if they were the same (wider)

type as the inputs. This example ensures that no overflows occur by always assigning to an ap_fixpt that uses the AP_SAT

overflow mode. This does incur a performance penalty, but this is minimized here by accumulating the results in a binary

fashion, such that there are only log(TAPS) = 3 saturating operations that depend on each other. If the results were

accumulated in a single variable in one loop then there would be TAPS = 8 saturating operations depending on each other.

Having more saturating operations in a row is slower because at each step overflow needs to be checked before the next

operation can occur.

2.13.5.1. Printing ap_[u]fixpt Types¶

The Arbitrary Precision Fixed Point Library provides some utilities for printing ap_[u]fixpt types in software, demonstrated below.

The to_fixpt_string(base, signedness) function takes an optional base argument which is one of 2, 10, or 16, and defaults to 10,

as well as an optional signedness argument which determines if the data should be treated as signed or unsigned. The signedness argument defaults to false

for ap_ufixpt, and true for ap_fixpt.

The output stream operator << can be used to put a fixed point number into an output stream

as if it were called with the default to_fixpt_string arguments.

The to_double() function can be useful for printing, but it can lose precision over a wide fixed point. It can be used

in hardware, but this is expensive, and should be avoided when possible.

#include "legup/ap_fixpt.hpp"

#include <stdio.h>

#include <iostream>

using namespace legup;

using namespace std;

...

ap_ufixpt<8, 4> fixed = 12.75;

ap_fixpt<8, 4> s_fixed("CC");

// prints: -52 * 2^-4

// Read -52 * 0.0625 = -3.25

cout << s_fixed << endl;

// prints: 11001100 * 2^-4

// Read unsigned 11001100 * 2^-4 = 204 * 0.0625

// = 12.75

printf("%s\n", fixed.to_fixpt_string(2).c_str());

// prints: CC * 2^-4

// Read signed CC * 2^-4 = -52 * 0.0625

// = -3.25

printf("%s\n", s_fixed.to_fixpt_string(16, false));

// prints: -3.25

printf("%.2f\n", s_fixed.to_double());

2.13.5.2. Initializing ap_[u]fixpt Types¶

The ap_[u]fixpt types can be constructed and assigned from other fixed points, the ap_[u]int types, C++ integer and floating

point types, as well as concatenations and bit selections. They can also be initialized from a hexadecimal string describing the exact

bits. Note that construction and assignment will always trigger the quantization and overflow handling of the ap_[u]fixpt being constructed or assigned to,

except when copying from the exact same type, or initializing from a hexadecimal string. For logical assignments of bits, bit selection assignments can be used, as well as

the from_raw_bits function, or the ap_[u]int to_fixpt<I_W>() functions in the case of ap_[u]int types.

Note

Initializing ap_[u]fixpt types from floating point types in hardware is expensive, and should be avoided when possible. However, initializing

ap_[u]fixpt from floating point literals is free, and happens at compile time.

Some examples of initializing fixed point types are shown in the following code snippet.

#include "legup/ap_fixpt.hpp"

#include "legup/ap_int.hpp"

using namespace legup;

...

// Initialized to -13.75

ap_fixpt<8, 4> fixed1 = -13.75;

// Initialized to 135

ap_ufixpt<8, 8> fixed2 = 135;

// Initialized to -112

// Could also write "0x9"

// 0x is optional

ap_fixpt<4, 8> fixed3("9");

// Initialized to 14

ap_ufixpt<10, 4> fixed4 = ap_uint<16>(14);

// Initialized to -1 (AP_SAT triggered)

ap_fixpt<4, 1, AP_TRN, AP_SAT> fixed5 = -4;

// Initialized to 1.5 (AP_RND triggered)

ap_ufixpt<4, 3, AP_RND> fixed6 = 1.25;

// Initialized to 15.75 from a logical string of bits

ap_ufixpt<8, 4> fixed7;

fixed7(7, 0) = ap_uint<8>("FC");

// Assign an existing ap_uint variable to an ap_ufixpt variable

ap_ufixpt<8, 4> fixed8;

fixed8(7, 0) = ap_uint_var;

// Initialize to 13 from a logical string of bits

ap_fixpt<6, 5> fixed9;

fixed9.from_raw_bits(ap_uint<6>(26));

// Initialize to -32 from a logical string of bits

// (First convert ap_uint<4> to ap_fixpt<4, 6> logically,

// then perform fixed point assignment)

ap_fixpt<1, 6> fixed10 = ap_uint<4>("8").to_fixpt<6>();

// Initialize to 32 from a logical string of bits

// (First convert ap_int<4> to ap_ufixpt<4, 6> logically,

// then perform fixed point assignment)

ap_ufixpt<1, 6> fixed11 = ap_int<4>("8").to_ufixpt<6>();

2.13.5.3. Arithmetic With ap_[u]fixpt Types¶

The Arbitrary Precision Fixed Point library supports all standard arithmetic, logical bitwise, shifts, and comparison

operations. During arithmetic intermediate results are kept in a wide enough type to hold all of the possible resulting values. Operands

are shifted to line up decimal points, and sign or zero extended to match widths before an operation is performed. For fixed

point arithmetic, whenever the result of a calculation can be negative the intermediate type is an ap_fixpt instead of ap_ufixpt

regardless of whether any of the operands were ap_fixpt.

Overflow and quantization handling only happen when the result is assigned to a fixed point type.

Note

Overflow and quantization handling is not performed for any assigning shifting operations (<<=, >>=) on ap_[u]fixpt types.

Also, non-assigning shifts (<<, >>, .ashr(x)) do not change the width or type of the fixed point they are applied to. This means that bits can be shifted out of

range.

Fixed point types can be mixed freely with other arbitrary precision and c++ numeric types for arithmetic, logical bitwise, and comparison operations, with some caveats for floating point types.

Note

For arithmetic and logical bitwise operations floating point types must be explicitly cast to an ap_[u]fixpt type before

being used, because of the wide range of possible values the floating point type could represent. It is also a good idea, but not required, to

use ap_[u]int types in place of C++ integers when less width is required.

Note

For convenience floating point types can be used directly in fixed point comparisons, however floating points are truncated

and wrapped as if they were assigned to a signed ap_fixpt just big enough to hold all values of the ap_[u]fixpt

type being compared against, with the AP_TRN and AP_WRAP modes on.

An example demonstrating some of this behaviour is show below.

#include "legup/ap_fixpt.hpp"

using namespace legup;

...

ap_ufixpt<65, 14> a = 32.5714285713620483875274658203125;

ap_ufixpt<15, 15> b = 7;

ap_fixpt<8, 4> c = -3.125;

// the resulting type is wide enough to hold all

// 51 fractional bits of a, and 15 integer bits of b

// the width, and integer width are increased by 1 to hold

// all possible results of the addition

ap_ufixpt<67, 16> d = a + b; // 39.5714285713620483875274658203125

// the resulting type is a signed fixed point

// with width, and integer width that are the sum

// of the two operands' widths

ap_fixpt<23, 19> e = b * c; // -21.875

// Assignment triggers the AP_TRN_ZERO quantization mode

ap_fixpt<8, 7, AP_TRN_ZERO> f = e; // -21.5

// Mask out bits above the decimal

f &= 0xFF; // -22

// Assignment triggers the AP_SAT overflow mode,

// and saturates the negative result to 0

ap_ufixpt<8, 4, AP_TRN, AP_SAT> g = b * d; // 0

2.13.5.4. Explicit Conversions of ap_[u]fixpt¶

There are several functions to explicitly convert ap_[u]fixpt types into other types, besides value based assignments. The raw_bits function produces a uint of

the same width as the ap_[u]fixpt with the same raw data, and to_double returns a double representing the value of the ap_[u]fixpt. Note that

for wide enough ap_[u]fixpt to_double can lose precision, and can be inefficient in hardware. These are demonstrated in the

following code snippet.

#include "legup/ap_fixpt.hpp"

using namespace legup;

...

ap_fixpt<12, 5> fixed("898");

ap_uint<12> logical_fixed = fixed.raw_bits();

logical_fixed == 0x898; // true

double double_fixed = fixed.to_double();

double_fixed == -14.8125; // true

2.13.6. Supported Operations in ap_[u]int, ap_[u]fixpt, and floating-point¶

The table below shows all the standard arithmetic operations that are supported in our Arbitrary Precision Integer and Fixed Point Libraries as well as for floating-point data types. It also shows some useful APIs that can be used to convert from one type to another or to convert to standard integral types or strings.

| Type | Operator | Description | ap_[u]int | ap_[u]fixpt | floating |

| Arithmetic | + | Addition | Y | Y | Y |

| - | Subtraction | Y | Y | Y | |

| * | Multiplication | Y | Y | Y | |

| / | Division | Y | Y | Y | |

| % | Modulo | Y | Y | Note Below | |

| ++ | Increment | Y | Y | Y | |

| – | Decrement | Y | Y | Y | |

| Assignment | = | Assignment | Y | Y | Y |

| += | Add and assign | Y | Y | Y | |

| -= | Sub and assign | Y | Y | Y | |

| *= | Mult and assign | Y | Y | Y | |

| /= | Div and assign | Y | Y | Y | |

| %= | Mod and assign | Y | Y | Note Below | |

| &= | bitwise AND and assign | Y | Y | N/A | |

| |= | Bitwise OR and assign | Y | Y | N/A | |

| ^= | Bitwise XOR and assign | Y | Y | N/A | |

| >>= | SHR and assign | Y | Y | N/A | |

| <<= | SHL and assign | Y | Y | N/A | |

| Comparison | == | Equal to | Y | Y | Y |

| != | Not equal to | Y | Y | Y | |

| > | Greater than | Y | Y | Y | |

| < | Less than | Y | Y | Y | |

| >= | Greater than or equal to | Y | Y | Y | |

| <= | Less than or equal to | Y | Y | Y | |

| Bitwise | & | Bitwise AND | Y | Y | N/A |

| ^ | Bitwise XOR | Y | Y | N/A | |

| | | Bitwise OR | Y | Y | N/A | |

| ~ | Bitwise Not | Y | Y | N/A | |

| .or_reduce() | Bitwise OR reduction | Y | Y | N/A | |

| Shift | << | Shift left | Y | Y | N/A |

| >> | Shift right | Y | Y | N/A | |

|

Arithmetic shift right | Y | Y | N/A | |

| Bit level access | num(a, b) | Range selection | Y | Y | N/A |

| num[a] | Bit selection | Y | Y | N/A | |

|

Concat | Y | Y | N/A | |

| Explicit Conversion | .to_ufixpt() | Convert to ap_ufixpt | Y | N/A | N/A |

| .to_fixpt() | Convert to ap_fixt | Y | N/A | N/A | |

| .to_uint64() | Convert to uint64 | Y | N/A | N/A | |

| .to_int64() | Convert to int64 | Y | N/A | N/A | |

| .raw_bits() | Convert to raw bits | N/A | Y | N/A | |

| .from_raw _bits() | Convert from raw bits | N/A | Y | N/A | |

| .to_double() | Convert to double | N/A | Y | N/A | |

| String Conversion | .to_fixpt_ string() | Convert to fixpt string | N/A | Y | N/A |

| .to_string() | Convert to int string | Y | Y | N/A |

Note

To use floating point remainder, call the fmod or fmodf function from the <math.h> header.

Note that the floating-point remainder core can be very large when used in a pipeline, so it should be used with care. For the same reason, floating point remainder is only directly supported for the float type. For double, the inputs to the core will be cast down to float, and the result will be cast back to double. This can result in a loss of precision, or incorrect results when the double input is not representable in the range of float.

2.13.7. Image Processing Library¶

The LegUp image processing library provides C++ class/function APIs for a number of commonly used image processing operations. You can use these class/function APIs by including the following header file,

#include "legup/image_processing.hpp"

2.13.7.1. Line Buffer¶

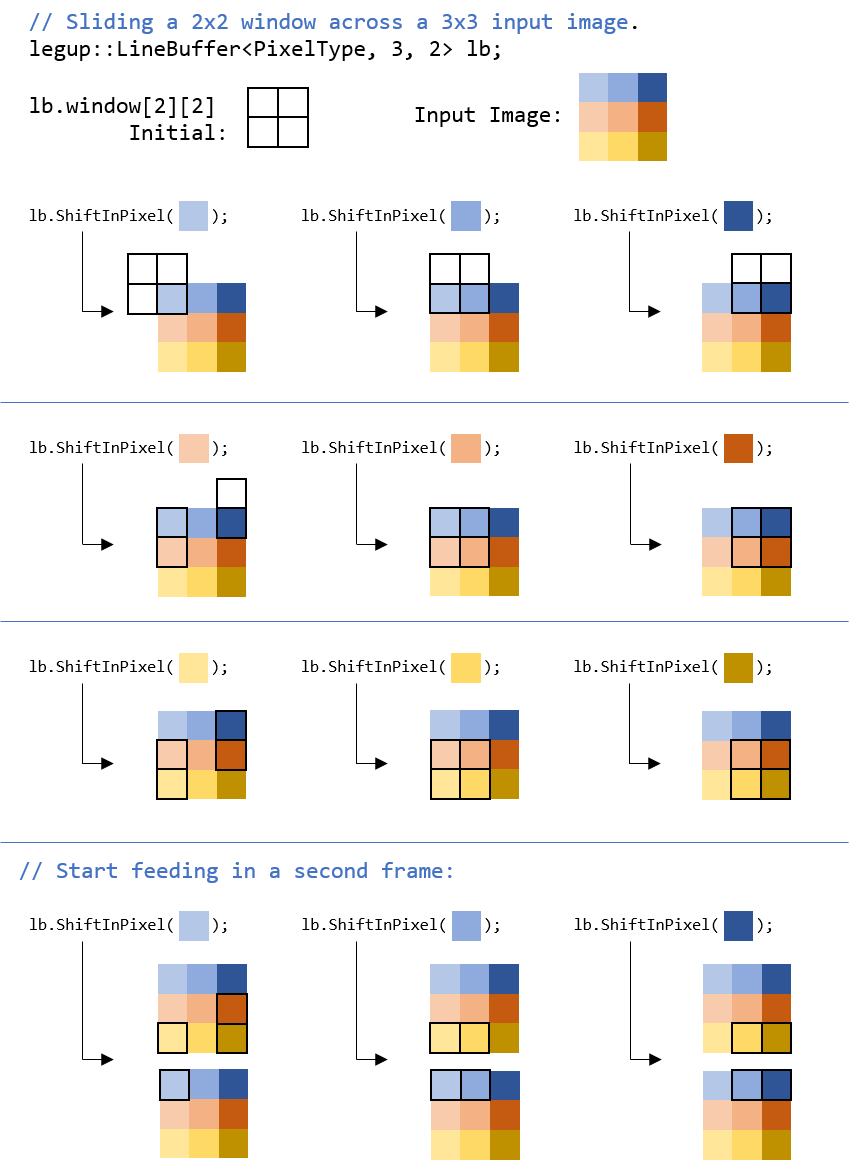

The LineBuffer class implements the line buffer structure that is commonly

seen in image convolution (filtering) operations, where a filter kernel is

“slided” over an input image and is applied on a local window (e.g., a square)

of pixels at every sliding location. As the filter is slided across the image,

the line buffer is fed with a new pixel at every new sliding location while

retaining the pixels of the previous image rows that can be covered for the

sliding window.

The public interface of the LineBuffer class is shown below,

template <typename PixelType, unsigned ImageWidth, unsigned WindowSize>

class LineBuffer {

public:

PixelType window[WindowSize][WindowSize];

void ShiftInPixel(PixelType input_pixel);

};

Below shows an example usage of the LineBuffer class:

- Instantiate the line buffer in your C++ code, with template

arguments being the pixel data type, input image width, and sliding window

size. The window maintained by the line buffer assumes a square

WindowSize x WindowSizewindow. If you are instantiating the line buffer inside a pipelined function (accepting a new pixel in every function call), you will need to add ‘static’ to make the line buffer static.

static legup::LineBuffer<unsigned char, ImageWidth, WindowSize> line_buffer;

- Shift in a new pixel by calling the

ShiftInPixelmethod:

line_buffer.ShiftInPixel(input_pixel);

- Then your filter can access any pixels in the

windowby:

line_buffer.window[i][j]

The figure below illustrates how the line buffer window is being updated

after each call of ShiftInPixel. You will notice that the window can

contain out-of-bound pixels at certain sliding locations.

For more details about when/why to use the LineBuffer class, see

Inferring a Line Buffer in the Optimization Guide.

2.14. LegUp C Library¶

LegUp also provides a number of C libraries for user convenience. For bit-level operations, we strongly recommend using our C++ Arbitrary Precision Data Types Library instead, but we provide this C bit-level operation library for user convenience, which can be used when the user does want to convert native C data types in an existing code to our C++ arbitrary precision data types. Note that using the C++ arbitrary precision data types may lead to more optimized hardware than using this C bit-level operation library. LegUp also provides support for some functions from the C Numerics Library (math.h in C / <cmath> in C++).

2.14.1. C Bit-Level Operation Library¶

LegUp provides a library to perform bit-level operations in hardware. These are functions that are easy to use and will lead to a more efficient hardware implementation than implementing the same operation in software. All of these functions can be compiled and correctly executed in software. You can use LegUp’s bit-level operation library by including the following header file:

#include "legup/bit_level_operations.h"

This library defines a number of C functions that perform common bit-level operations. The software implementation of the library matches to that of the generated hardware. Users can simulate the low-level bit operations accurately in software prior to generating its hardware.

Note

The index and width arguments must be constant integers and must be within the bit range of the variable being selected or updated.

2.14.1.1. Selecting A Range of Bits¶

unsigned long long legup_bit_select(unsigned long long v, unsigned char msb_index, unsigned char lsb_index);

This function selects a range of bits, from the msb_index bit down to the lsb_index bit (where the bit index starts from 0), from the input variable, v, and returns it.

The lower (msb_index - lsb_index + 1) bits of the return value are set to

the specified range of v, and the rest of the upper bits of the return value are set to 0.

The equivalent Verilog statement will be:

return_val[63:0] = { (64 - (msb_index - lsb_index + 1)){1'b0}, // Upper bits set to 0.

v[msb_index : lsb_index] // Selected bits of v.

};

2.14.1.2. Updating A Range of Bits¶

unsigned long long legup_bit_update(unsigned long long v, unsigned char msb_index, unsigned char lsb_index, unsigned long long value);

This function updates a range of bits, from the msb_index bit down to the lsb_index bit (where the bit index starts from 0), of the input variable, v, with the given value, value, and returns the updated value.

The equivalent Verilog statement will be:

return_val[63:0] = v[63:0];

return_val[msb_index : lsb_index] // Update selected range of bits.

= value[msb_index - lsb_index : 0]; // The lower (msb_index - lsb_index) bits of value.

2.14.1.3. Concatenation¶

unsigned long long legup_bit_concat_2(unsigned long long v_0, unsigned char width_0, unsigned long long v_1, unsigned char width_1);

This function returns the bit-level concatenation of the two input variables, v_0, and v_1.

The lower width_0 bits of v_0 are concatenated with the lower width_1 bits of v_1, with the v_0 bits being the upper bits of the v_1 bits.

The concatenated values are stored in the lower (width_0 + width_1) bits of the return value, hence if the bit-width of the return value is bigger than (width_0 + width_1), the rest of the upper bits of the return value are set to 0.

The equivalent Verilog statement will be:

return_val[63:0] = { (64 - width_0 - width_1){1'b0}, // Upper bits set to 0.

v_0[width_0 - 1 : 0], // Lower width_0 bits of v_0.

v_1[width_1 - 1 : 0] // Lower width_1 bits of v_1.

};